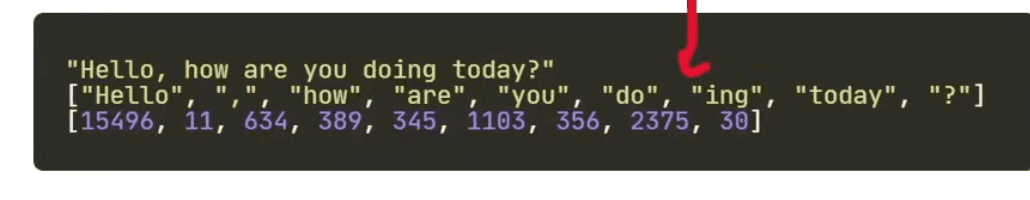

Tokenization breaks input text into smaller parts called tokens.

Each token maps to a unique integer ID in the model’s vocabulary, and each model has its own vocabulary.
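A toy illustration of the token-to-ID lookup. It assumes whitespace splitting as a stand-in for a real tokenizer. The tiny vocabulary, the `[UNK]` fallback token, and the `encode`/`decode` helpers are all hypothetical:

```python
# Hypothetical toy vocabulary: token string -> unique integer ID.
# Real model vocabularies hold tens of thousands of entries.
vocab = {"[UNK]": 0, "the": 1, "cat": 2, "sat": 3, "on": 4, "mat": 5}
id_to_token = {i: t for t, i in vocab.items()}

def encode(tokens):
    """Map each token to its ID, falling back to the [UNK] ID if absent."""
    return [vocab.get(t, vocab["[UNK]"]) for t in tokens]

def decode(ids):
    """Map IDs back to their token strings."""
    return [id_to_token[i] for i in ids]

# Whitespace split used here only as a simplified tokenizer.
tokens = "the cat sat on the mat".split()
ids = encode(tokens)
print(ids)          # [1, 2, 3, 4, 1, 5]
print(decode(ids))  # ['the', 'cat', 'sat', 'on', 'the', 'mat']
```

A word outside the vocabulary (for example `"dog"`) collapses to ID 0. That information loss is why real tokenizers split unknown words into smaller subword pieces instead.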

Common tokenization algorithms and models that use them:

- Byte Pair Encoding (BPE): GPT-3, GPT-3.5
- WordPiece: BERT
- SentencePiece: T5
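A minimal sketch of how BPE learns its merges: start from single characters and repeatedly merge the most frequent adjacent pair. This is a simplified character-level version (GPT models apply BPE to bytes). The toy corpus and the function names are hypothetical. Ties between equally frequent pairs go to the pair counted first.

```python
from collections import Counter

def get_pair_counts(words):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs

def merge_pair(words, pair):
    """Replace every occurrence of `pair` with one merged symbol."""
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a whitespace-split corpus."""
    # Each word starts as a tuple of single characters.
    words = Counter(tuple(w) for w in corpus.split())
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(words)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words

merges, words = learn_bpe("hug hug hug pug pug hugs", num_merges=2)
print(merges)  # [('u', 'g'), ('h', 'ug')]
print(words)   # {('hug',): 3, ('p', 'ug'): 2, ('hug', 's'): 1}
```

The learned merge list, together with the resulting symbols, forms the vocabulary. At encoding time the same merges are replayed in order on new text.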

Input text must be converted into tokens that exist in the model’s vocabulary, which means using the same tokenizer the model was trained with.
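To show what matching the vocabulary looks like for subwords, here is a sketch of the greedy longest-match-first splitting that WordPiece (BERT) uses. Continuation pieces carry BERT's `##` prefix. The toy vocabulary and the `wordpiece_tokenize` name are hypothetical. The sketch also omits the whitespace/punctuation pre-splitting and per-word length limit of the real implementation.

```python
def wordpiece_tokenize(word, vocab, unk="[UNK]"):
    """Split one word into the longest pieces found in `vocab`, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking until a hit.
        while start < end:
            piece = word[start:end]
            if start > 0:
                piece = "##" + piece  # mark a continuation of the word
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            # No vocabulary piece fits: the whole word becomes the unknown token.
            return [unk]
        pieces.append(match)
        start = end
    return pieces

vocab = {"[UNK]", "un", "##aff", "##able", "play", "##ing"}
print(wordpiece_tokenize("unaffable", vocab))  # ['un', '##aff', '##able']
print(wordpiece_tokenize("playing", vocab))    # ['play', '##ing']
print(wordpiece_tokenize("xyz", vocab))        # ['[UNK]']
```

Each resulting piece is a vocabulary entry, so it can then be mapped to its ID.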