A foundation model that implements the Transformer architecture

- The model is trained to capture grammar, language semantics, word usage, style, tone, etc.

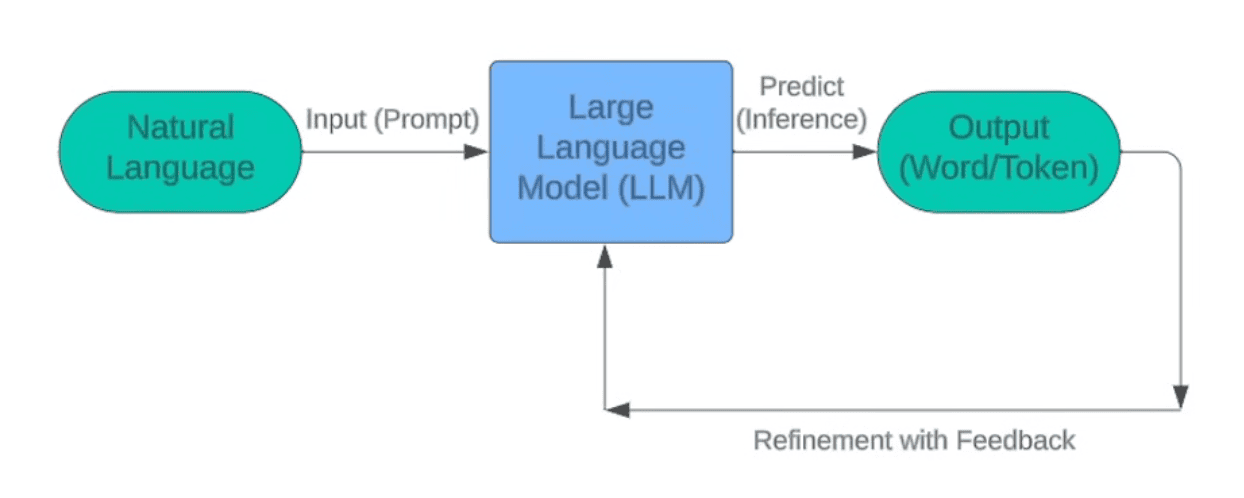

Researchers don’t fully understand how an LLM reasons, but it’s simple to say that an LLM always predicts the next word (more precisely, the next token), as shown in the image above.
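
The "predict the next word, append it, repeat" loop can be illustrated with a toy sketch. This is not a Transformer and not how a real LLM is built: it is a simple bigram counter, used here only as an assumption-laden stand-in to show the generation loop. The corpus and the function names (`train_bigram`, `predict_next`, `generate`) are made up for this example.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, prompt, max_new_words=5):
    """Repeatedly predict the next word and append it to the running text."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        next_word = predict_next(counts, words[-1])
        if next_word is None:
            break  # nothing was ever seen after this word
        words.append(next_word)
    return " ".join(words)


# Hypothetical toy corpus, standing in for the training data.
corpus = "the cat sat on the mat . the cat sat on the sofa ."
model = train_bigram(corpus)

print(predict_next(model, "the"))                   # cat
print(generate(model, "the cat", max_new_words=3))  # the cat sat on the
```

A real LLM replaces the bigram counts with a Transformer that looks at the whole preceding context and outputs a probability for every token in its vocabulary, but the outer loop has the same shape: predict one token, append it, and predict again.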